Genie 3 is Google DeepMind’s groundbreaking general-purpose “world model” that generates fully interactive, physically consistent 3D environments from simple text prompts in real time, marking a major step toward embodied AI and AGI.

Unlike passive video generators, it creates explorable worlds that respond to user actions over several minutes, using an 11B-parameter auto-regressive transformer trained on 200,000+ hours of video to learn physics, dynamics, and coherence without hard-coded rules. Running at 720p and 24 FPS on TPU v5s, Genie 3 enables rapid game prototyping, large-scale robotics and AI agent training, immersive education, scientific visualization, disaster simulation, and film pre-visualization.

While it still has limits in interaction depth, multi-agent complexity, and long-duration consistency, its ability to instantly generate diverse, interactive environments positions world models as a core foundation for future AI systems and signals a shift from static content generation toward truly experiential, learning-driven artificial intelligence.


| Category | Details |
|---|---|
| Model Name | Genie 3 |
| Developer | Google DeepMind |
| Model Type | General-purpose world model |
| Core Capability | Generates real-time, interactive 3D worlds from text |
| Architecture | 11B-parameter auto-regressive transformer |
| Resolution | 720p (1280×720) |
| Frame Rate | 24 FPS (real time) |
| Interaction Duration | Several minutes of consistent simulation |
| Physics | Learned (no hard-coded physics engine) |
| Training Data | 200,000+ hours of video |
| Memory | ~1 minute of visual scene retention |
| Hardware | Google TPU v5 |
| Key Use Cases | Game prototyping, robotics training, AI agents, education, simulation |
| Differentiator | Persistent, explorable worlds vs. passive video generation |
| Availability | Limited via Google Labs “Project Genie” |
| AGI Relevance | Enables embodied learning and scalable world simulation |

Genie 3: The Breakthrough AI World Model Transforming Game Development, Robotics, and AGI Research

Google DeepMind has unveiled Genie 3, a revolutionary general-purpose world model that generates fully interactive 3D environments from simple text prompts in real time. This breakthrough technology represents a seismic shift in artificial intelligence, creating what researchers describe as a “stepping stone toward AGI” by enabling machines to simulate, understand, and learn from diverse virtual worlds at unprecedented speed and scale.

Unlike passive video generators such as OpenAI’s Sora, which produce pre-determined sequences, Genie 3 creates living, explorable worlds that respond to user actions and persist consistently across multiple minutes of real-time interaction. The implications are staggering: unlimited training environments for AI agents, instant game prototyping, immersive educational simulations, and a fundamental new approach to developing artificial general intelligence.

This comprehensive guide explores Genie 3’s capabilities, how it works, practical applications across industries, and what this breakthrough means for the future of AI development.

What Is Genie 3? Understanding DeepMind’s World Model

Genie 3 is a foundation world model: an AI system trained to understand, simulate, and predict how physical environments evolve over time. Rather than generating fixed images or videos, it builds interactive 3D worlds frame by frame, responding dynamically to user input while maintaining physical consistency.

The model processes text prompts like:

- “A volcanic landscape with realistic lava flows and dynamic terrain deformation”

- “Ancient Rome with explorable architecture and crowd simulations”

- “A forest environment for autonomous agent navigation training”

Within seconds, Genie 3 transforms these descriptions into fully explorable, physics-simulating environments rendered at 720p and 24 frames per second.

Key Specifications

| Feature | Specification |
|---|---|
| Architecture | 11-billion-parameter auto-regressive transformer |
| Resolution | 720p (1280×720) |
| Frame Rate | 24 frames per second |
| Interaction Duration | Several minutes of consistent simulation |
| Visual Memory | Up to one minute of scene retention |
| Training Data | 200,000+ hours of video |
| Hardware Platform | Google TPU v5 infrastructure |

How Genie 3 Works: The Technology Behind Interactive Worlds

The Auto-Regressive Architecture

At its core, Genie 3 operates like an author writing a story one word at a time, except that it writes worlds one frame at a time. In this auto-regressive approach, each new frame is generated conditioned on the frames that came before it and on the user's latest input, which keeps the world consistent and causally coherent.

The process unfolds as follows:

- Text-to-Semantic Understanding: The model parses the text prompt to extract environmental parameters

- Iterative Frame Generation: Each new frame is rendered based on previous frames, current user input, and physics predictions

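- Consistency Maintenance: The model refers back to previously generated frames (roughly the last minute) to avoid visual contradictions; DeepMind describes this consistency as an emergent capability rather than an explicitly programmed memory system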
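A minimal Python sketch of the frame-by-frame loop described above, under loud assumptions: `Frame`, `MOVES`, and `predict_next_frame` are hypothetical stand-ins (a "frame" is just a camera position and the "model" is a hand-written rule), and the rolling context window is sized from the roughly one-minute visual memory quoted in the spec table. DeepMind has not published Genie 3's implementation; this only shows how each generated frame is fed back in as context for the next one.

```python
from collections import deque
from dataclasses import dataclass

FPS = 24
MEMORY_SECONDS = 60  # about one minute of visual memory, as quoted in the spec table
CONTEXT_FRAMES = FPS * MEMORY_SECONDS  # 1,440 frames kept as context


@dataclass(frozen=True)
class Frame:
    """Toy stand-in for a generated frame: only the camera position is tracked."""
    x: int
    y: int


# Hypothetical discrete action space for this sketch.
MOVES = {"forward": (0, 1), "back": (0, -1), "left": (-1, 0), "right": (1, 0), "wait": (0, 0)}


def predict_next_frame(context, action):
    """Hypothetical stand-in for the learned model.

    A real world model would be a neural network reading the whole context;
    this toy version only looks at the latest frame and applies the action.
    """
    last = context[-1]
    dx, dy = MOVES[action]
    return Frame(last.x + dx, last.y + dy)


def rollout(first_frame, actions, context_frames=CONTEXT_FRAMES):
    """Generate one frame per action, each conditioned on the frames so far."""
    context = deque([first_frame], maxlen=context_frames)  # oldest frames fall out
    frames = [first_frame]
    for action in actions:
        next_frame = predict_next_frame(context, action)
        context.append(next_frame)  # the new frame becomes context for the next step
        frames.append(next_frame)
    return frames, context


frames, context = rollout(Frame(0, 0), ["forward", "forward", "right"], context_frames=2)
print(frames[-1])    # Frame(x=1, y=2)
print(len(context))  # 2: only the most recent frames remain in memory
```

The small `context_frames=2` in the usage line makes the forgetting visible: anything older than the window can no longer influence generation, which is one way to picture why long-horizon consistency is hard.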

Emergent Physics Without Hard-Coded Rules

One of Genie 3’s most striking capabilities is learned physics. Rather than relying on traditional physics engines with explicit rules, DeepMind trained the model by exposing it to over 200,000 hours of videos, including gameplay footage and real-world recordings.

From this data, the model learned approximations of fundamental physical behavior on its own:

- Gravity and Motion: Objects fall and move realistically

- Collisions: Entities interact with proper bounce and friction

- Fluid Dynamics: Water flows, wind blows, lava behaves realistically

- Material Properties: Different surfaces reflect light and deform appropriately
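The idea of learning dynamics from observation instead of coding them can be shown at toy scale. This Python sketch is an analogy, not Genie 3's method: it generates "footage" of a falling object (one height per frame at 24 FPS), then recovers the acceleration purely from consecutive frames with a least-squares fit and uses it to predict the next frame. The function names and the semi-implicit Euler data generator are illustrative assumptions; Genie 3 learns far richer dynamics with a large neural network rather than fitting a single constant.

```python
DT = 1 / 24  # one frame at 24 FPS


def observe_falling_object(h0, g=-9.81, steps=48, dt=DT):
    """Produce 'video' observations: heights of a dropped object, one per frame.

    The ground-truth g is used only to make the data; the learner never sees it.
    """
    heights, h, v = [], h0, 0.0
    for _ in range(steps):
        heights.append(h)
        v += g * dt  # semi-implicit Euler: update velocity first...
        h += v * dt  # ...then position with the new velocity
    return heights


def fit_acceleration(heights, dt=DT):
    """Least-squares estimate of a constant acceleration from consecutive frames.

    For constant acceleration a, h[t+1] - 2*h[t] + h[t-1] = a * dt**2, and the
    least-squares fit of a constant is the mean of those second differences.
    """
    diffs = [heights[t + 1] - 2 * heights[t] + heights[t - 1] for t in range(1, len(heights) - 1)]
    return sum(diffs) / len(diffs) / dt**2


def predict_next(prev, curr, a, dt=DT):
    """Roll the learned dynamics forward by one frame."""
    return 2 * curr - prev + a * dt**2


heights = observe_falling_object(h0=100.0)
a = fit_acceleration(heights)
print(round(a, 2))  # -9.81
```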

Multi-Scale Attention Mechanisms

DeepMind has not published Genie 3's internal architecture in detail, but the model's coherence across scales can be described in terms of multi-scale attention:

- Local Consistency: Fine-grained details remain stable

- Global Coherence: Overall structure maintains logical consistency

- Hierarchical Understanding: Distant landscapes remain consistent as the viewer approaches them
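Because the architecture is not public, the following is only a generic illustration of what "multi-scale" attention can mean, similar in spirit to the sliding-window-plus-global patterns used in some long-sequence transformers. The function `multi_scale_mask` and its `window` and `stride` parameters are hypothetical: each token attends to nearby tokens (local scale) and to sparse anchor tokens (global scale), with a causal constraint as in auto-regressive generation.

```python
def multi_scale_mask(n, window, stride, causal=True):
    """Boolean attention mask: mask[i][j] is True if token i may attend to token j.

    - Local scale: each token sees neighbours within `window` positions.
    - Global scale: every token also sees coarse anchor tokens at multiples of `stride`.
    - Causal: with causal=True, no token attends to a later position, as in
      auto-regressive generation.
    """
    mask = []
    for i in range(n):
        row = []
        for j in range(n):
            local = abs(i - j) <= window
            global_anchor = j % stride == 0
            allowed = (local or global_anchor) and (j <= i or not causal)
            row.append(allowed)
        mask.append(row)
    return mask


mask = multi_scale_mask(n=8, window=1, stride=4)
print([j for j, ok in enumerate(mask[6]) if ok])  # [0, 4, 5, 6]
```

Token 6 sees its immediate predecessor (local detail) plus anchors 0 and 4 (coarse context), while token 7 is hidden because it lies in the future.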

Real-Time Processing: TPU v5 Optimization

Genie 3 sustains 24 FPS by running on Google’s cutting-edge TPU v5 infrastructure. At that frame rate, each frame must be generated in roughly 41.7 milliseconds (1,000 ms ÷ 24) to keep the simulation smooth and continuous.
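The frame-rate arithmetic can be checked directly. This short sketch assumes only the figures quoted in this article (1280×720 output at 24 FPS and roughly one minute of visual memory); the helper names are illustrative.

```python
WIDTH, HEIGHT = 1280, 720  # 720p
FPS = 24


def frame_budget_ms(fps):
    """Time available to generate each frame if output must keep pace with playback."""
    return 1000.0 / fps


def frames_in(seconds, fps=FPS):
    """Number of frames generated over a span of interaction."""
    return round(seconds * fps)


print(f"{frame_budget_ms(FPS):.1f} ms per frame")           # 41.7 ms per frame
print(frames_in(60), "frames per minute")                   # 1440 frames per minute
print(WIDTH * HEIGHT * FPS, "pixels generated per second")  # 22118400 pixels generated per second
```

A minute of interaction therefore means 1,440 sequential generation steps, each finished inside the ~41.7 ms budget.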

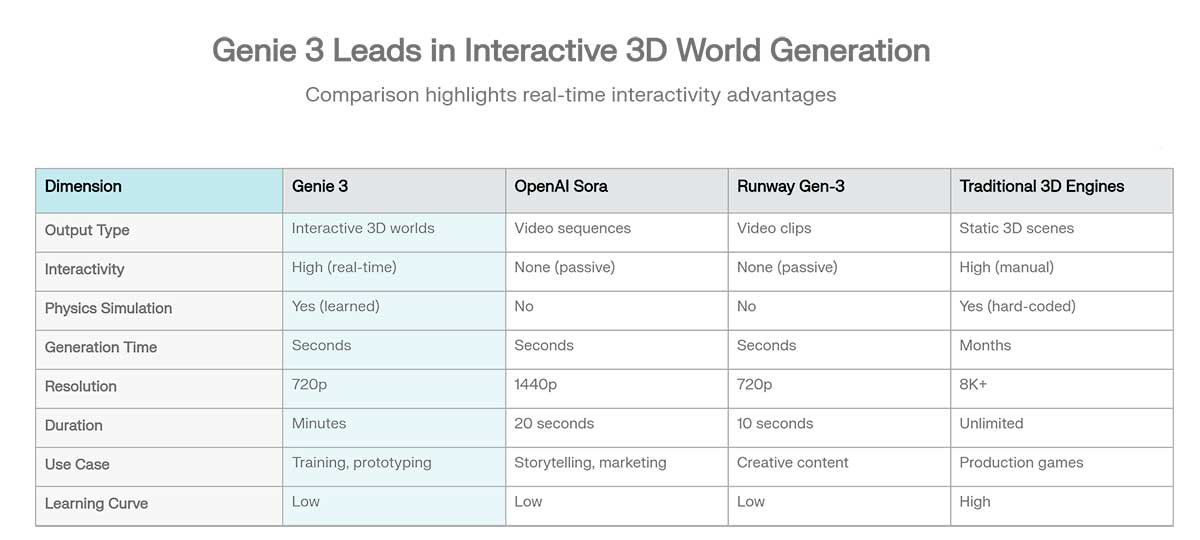

Genie 3 vs. Competitors: How It Stands Apart

The generative AI landscape includes several competing technologies for content creation. Genie 3 is positioned differently: few, if any, other systems combine real-time interactivity with learned physics and environmental consistency spanning multiple minutes.


Key Advantages Over OpenAI Sora

While OpenAI’s Sora excels at photorealistic video generation, it produces passive sequences—viewers watch pre-determined narratives unfold. Genie 3 inverts this model by creating interactive environments where agents, humans, and AI systems can explore, make decisions, and experience genuine branching possibilities.

For game developers and roboticists, this distinction is critical. Sora cannot generate a warehouse environment where a robot learns to navigate; Genie 3 can.

Key Advantages Over Runway Gen-3

Runway Gen-3 specializes in hyper-realistic video clips of up to 10 seconds with artistic control. However, these remain fixed, non-interactive sequences. Genie 3’s real-time physics simulation and minutes-long consistency enable continuous interaction, which is essential for AI training scenarios where agents need to learn from prolonged exposure to diverse situations.

Key Advantages Over Traditional 3D Engines

Game development studios using Unreal Engine or Unity can spend months creating a single level. Genie 3 generates explorable environments in seconds. However, traditional engines provide unmatched fidelity. Genie 3’s strength lies in rapid prototyping, not production-grade final assets.

Real-World Applications: Where Genie 3 Creates Value

1. AI Agent and Robotics Training

The Challenge: Training autonomous systems requires exposure to countless real-world scenarios. Physical testing is expensive, dangerous, and time-consuming.

Genie 3’s Solution: Instantly generate diverse, physics-realistic training environments.

Example Use Case: A robotics company developing warehouse automation systems can use Genie 3 to:

- Generate 10,000 variations of warehouse layouts

- Train robots in each configuration before real-world deployment

- Test rare or dangerous edge cases without physical risk

- Reduce costly physical testing iterations
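Genie 3 has no public API, so the sketch below covers only the prompt side of this workflow: enumerating scene attributes to produce exactly 10,000 distinct warehouse prompts, a simple form of domain randomization. The attribute lists and `warehouse_prompts` are hypothetical; how each prompt would be submitted to Project Genie (or any future API) is deliberately not shown.

```python
from itertools import product

# Hypothetical scene attributes; each combination becomes one text prompt.
AISLE_LAYOUTS = ["narrow parallel aisles", "wide parallel aisles", "U-shaped aisles",
                 "an open cross-docking floor", "a mezzanine level"]
LIGHTING = ["bright overhead lighting", "dim night-shift lighting",
            "flickering lights", "daylight from skylights"]
FLOORS = ["dry concrete", "wet concrete", "oil-stained concrete", "painted epoxy", "cracked concrete"]
CLUTTER = ["no clutter", "scattered boxes", "a fallen pallet", "loose shrink wrap", "spilled packaging"]
SHELVING = ["low shelving", "medium shelving", "tall racks", "mixed-height racks"]
TRAFFIC = ["no other traffic", "one forklift", "two forklifts", "workers on foot", "forklifts and workers"]


def warehouse_prompts():
    """Yield one prompt per combination of attributes (5*4*5*5*4*5 = 10,000)."""
    for layout, light, floor, clutter, shelving, traffic in product(
            AISLE_LAYOUTS, LIGHTING, FLOORS, CLUTTER, SHELVING, TRAFFIC):
        yield (f"A warehouse with {layout}, {light}, {floor} floors, {clutter}, "
               f"{shelving} and {traffic}")


prompts = list(warehouse_prompts())
print(len(prompts))  # 10000
print(prompts[0])
```

Exhaustive enumeration guarantees coverage of every combination; random sampling from the same lists would be the usual alternative when the full grid is too large.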

DeepMind’s own SIMA agent was tested within Genie 3 environments, successfully achieving goals such as “approach the bright green trash compactor.”

2. Game Development and Level Design

The Challenge: Creating engaging game levels requires iterative design, asset creation, and playtesting—a months-long process per level.

Genie 3’s Solution: Designers instantly prototype level concepts and game mechanics.

Compressing this iteration cycle from weeks to minutes can dramatically accelerate game development.

3. Educational Simulations and Immersive Learning

The Challenge: Teaching abstract concepts relies on static textbooks and videos—limiting student engagement and retention.

Genie 3’s Solution: Create interactive, explorable educational environments.

Example Simulations:

- “Ancient Rome”: Students explore the Forum and understand urban planning in context

- “Inside a Bakery Kitchen”: See bread baking processes at each stage

- “Solar System Explorer”: Navigate between planets with approximate, learned physics to build intuition for orbital mechanics

- “Climate Change Scenario”: Manipulate environmental variables and observe ecosystem responses

4. Scientific Visualization and Research

The Challenge: Researchers visualizing complex phenomena rely on static renders or expensive specialized software.

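Genie 3’s Solution: Generate interactive visualizations of natural and physical phenomena.

Research Applications:

- Geology: Visualize tectonic plate interactions and mineral formation

- Meteorology: Visualize hurricane formation and atmospheric dynamics

- Biology: Visualize ecosystem interactions and habitat evolution

- Physics: Illustrate quantum phenomena and particle collisions

Because Genie 3’s physics is learned from video rather than computed from equations, these worlds are qualitative illustrations, not substitutes for validated scientific simulations.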


5. Film and Animation Pre-Visualization

The Challenge: Directors spend weeks in pre-visualization, storyboarding complex scenes and camera movements.

Genie 3’s Solution: Directors instantly generate realistic virtual sets and explore camera angles in real time.

This real-time visualization shortens pre-production cycles and enables more informed creative decisions before expensive on-set shooting.

6. Emergency Response and Disaster Training

The Challenge: First responders need experience in high-stakes scenarios but can only train with limited simulations.

Genie 3’s Solution: Generate realistic disaster scenarios for safe training.

Training Scenarios:

- Flooding with dynamic water physics

- Building collapse with debris physics

- Chemical plant explosion with environmental spread

- Wildfire with dynamic wind and terrain effects

Current Availability: How to Access Genie 3

Limited Research Preview (August 2025 – Present)

When DeepMind first announced Genie 3 in August 2025, access was restricted to a small cohort of trusted researchers and creators. This research preview allowed DeepMind to gather feedback and refine safety measures before broader release.

Project Genie: Public Rollout (January 2026 – Present)

Google has begun expanding access through Project Genie, an experimental prototype in Google Labs. As of January 2026:

Who Can Access:

- Google AI Ultra subscribers ($249.99/month)

- Users aged 18 and older

- Currently US-based (with plans to expand to additional territories)

How to Access:

- Subscribe to Google AI Ultra ($249.99/month)

- Visit Google Labs

- Select “Project Genie”

- Begin creating worlds

Current Capabilities:

- Text-to-world generation

- Real-time exploration at 24 FPS

- Promptable world events (modify environments dynamically)

- Multi-variant generation


Future Availability Timeline

DeepMind has explicitly stated its goal to “make these experiences and technology accessible to more users” beyond the current AI Ultra tier. No timeline has been announced, so any schedule is speculative; one plausible progression would be:

- Mid-2026: Expanded access to non-Ultra subscribers

- 2027: Integration into Google’s consumer products

- 2028+: Potential open-source or API access for developers

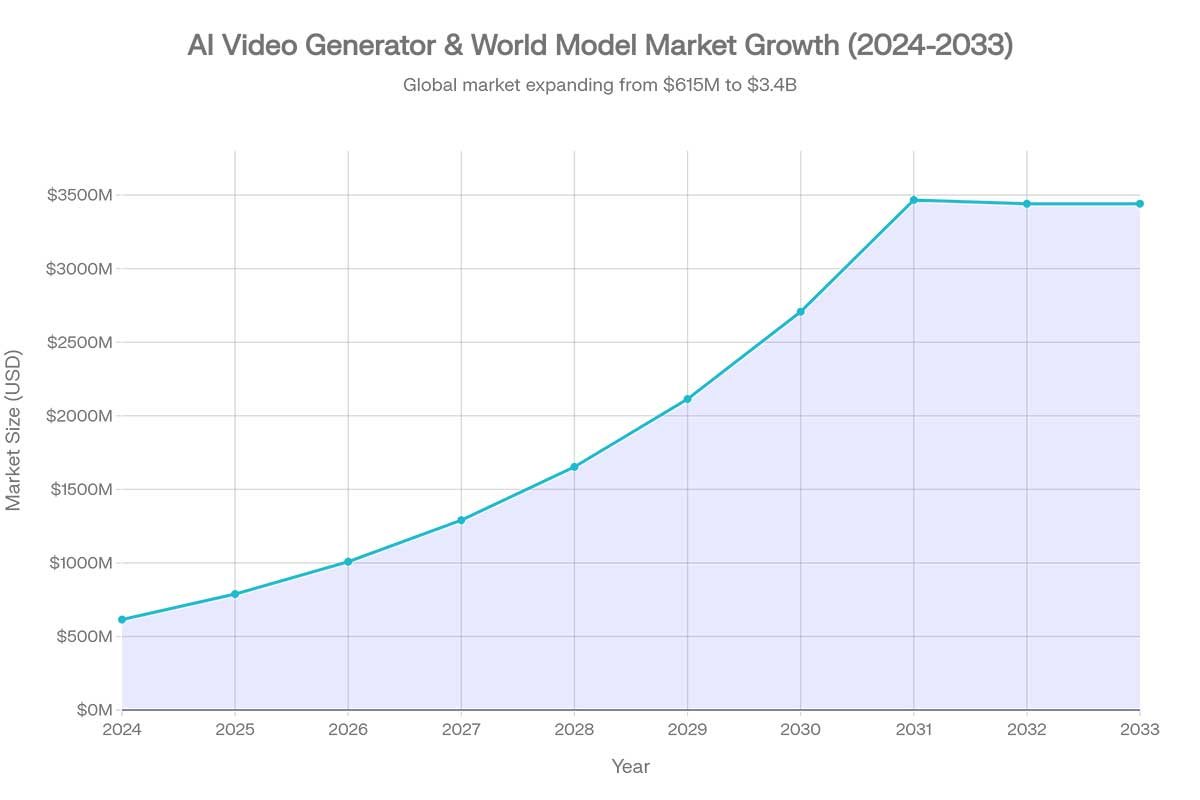

Market Context: The Explosive Growth of Generative AI

According to one market-research estimate, the AI video generator market was valued at $614.8 million in 2024, is projected to reach $788.5 million in 2025, and is expected to exceed $3.4 billion by 2032, with a cited compound annual growth rate (CAGR) of 20.3%.
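Taken at face value, these figures do not fully reconcile, which is easy to check with the standard CAGR formula, (end / start)^(1 / years) - 1. The sketch below uses only the numbers quoted above: growing from $788.5 million to $3.4 billion over 2025 to 2032 implies a CAGR of about 23%, while compounding $788.5 million at 20.3% for seven years gives roughly $2.9 billion. One possible explanation is that the figures come from different estimates or base years, so they are best treated as rough indicators.

```python
def cagr(start, end, years):
    """Compound annual growth rate implied by growing from start to end."""
    return (end / start) ** (1 / years) - 1


def project(start, rate, years):
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


# Figures quoted above, in millions of US dollars.
v2024, v2025, v2032 = 614.8, 788.5, 3400.0

print(f"{cagr(v2024, v2025, 1):.1%}")      # 28.3%  (2024 -> 2025)
print(f"{cagr(v2025, v2032, 7):.1%}")      # 23.2%  (2025 -> 2032)
print(f"{project(v2025, 0.203, 7):,.0f}")  # 2,875  (2025 value compounded at 20.3% for 7 years)
```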

Key market drivers:

- Content creators seeking faster production workflows

- Enterprises automating video generation for marketing

- Researchers requiring diverse training data

- Developers building AR/VR applications

- Educators creating interactive learning experiences

- Roboticists training autonomous systems

The fastest-growing segment is Asia-Pacific, which now represents 31% of the global AI video generation market, driven by large populations of content creators, tech-savvy demographics, and rapid social media adoption.

Limitations and Constraints: What Genie 3 Cannot (Yet) Do

While Genie 3 represents a revolutionary leap, current limitations are important for realistic assessment:

Consistency Duration (Several Minutes)

Genie 3 maintains physical and visual consistency for several minutes of continuous interaction, a large improvement over Genie 2, whose showcased worlds mostly stayed consistent for 10 to 20 seconds. However, this still falls short of the hours required for complex agent training or full game experiences.

Limited Text Rendering

Generated text within worlds is minimal and unreliable. Text is primarily rendered if explicitly specified in the initial prompt—dynamic text generation remains poor.

Action Space and Interaction Constraints

Genie 3 supports a defined set of interactions (camera movement, basic object manipulation, promptable world events), but the action space is more limited than full 3D engines.

Multi-Agent Complexity

Simulating complex interactions between multiple autonomous agents remains challenging.

Real-World Replication Accuracy

While Genie 3 can generate environments inspired by real locations, it cannot perfectly replicate specific buildings or landscapes with photographic accuracy.

The Path to AGI: Why Genie 3 Matters

DeepMind researchers repeatedly emphasize that Genie 3 is a stepping stone toward Artificial General Intelligence (AGI)—AI systems capable of reasoning, learning, and acting across diverse domains with human-like understanding.

The Embodied Intelligence Hypothesis

A foundational premise in AGI research is the embodied intelligence hypothesis: machines achieve genuine understanding and general capabilities only through interaction with diverse environments. On this view, text-based learning alone is insufficient.

Genie 3 addresses this bottleneck by:

- Unlimited Curriculum Environments: Agents can train across an effectively unlimited range of scenario variations

- Self-Directed Learning: Agents explore, experiment, and learn from mistakes

- Transfer Learning: Capabilities learned in one domain transfer to novel domains

- Efficient Scaling: Multiple agents trained in parallel across diverse environments
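The curriculum idea can be made concrete with a small scheduler. This is a generic curriculum-learning sketch under stated assumptions, not a DeepMind component: `CURRICULUM`, `CurriculumScheduler`, and the success threshold are hypothetical, and running an agent episode inside a Genie 3 world is left out. The scheduler serves one prompt at a time and moves to a harder world once the agent's rolling success rate reaches the threshold.

```python
from collections import deque

# Hypothetical prompts, ordered from easy to hard.
CURRICULUM = [
    "an empty room with a single bright green target",
    "a cluttered storeroom with a bright green target behind boxes",
    "a multi-room office with a bright green target on another floor",
]


class CurriculumScheduler:
    """Advance to a harder environment once the agent's recent success rate is high enough."""

    def __init__(self, levels, threshold=0.8, window=10):
        self.levels = levels
        self.threshold = threshold
        self.window = window
        self.level = 0
        self.recent = deque(maxlen=window)  # rolling record of episode outcomes

    def current_prompt(self):
        return self.levels[self.level]

    def report(self, success):
        """Record one episode's outcome and promote the agent if it has mastered this level."""
        self.recent.append(bool(success))
        window_full = len(self.recent) == self.window
        mastered = window_full and sum(self.recent) / self.window >= self.threshold
        if mastered and self.level < len(self.levels) - 1:
            self.level += 1
            self.recent.clear()  # start measuring afresh on the new level


scheduler = CurriculumScheduler(CURRICULUM, threshold=0.8, window=5)
for outcome in [True, True, False, True, True]:  # 4 of 5 episodes succeed
    scheduler.report(outcome)
print(scheduler.level)  # 1
```

Requiring a full window before promotion avoids advancing on one lucky episode; the last level simply keeps serving its prompt.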

World Models as Foundational AI

The field increasingly recognizes world models as central to AGI development. Genie 3 is among the first world models to combine real-time interaction, environmental consistency, general-purpose capability, and diverse scenario generation in a single system.

Responsible AI and Safety Considerations

DeepMind developed Genie 3 with explicit attention to responsible AI and safety, including:

Collaborative Safety Development

Genie 3’s development involved collaboration with DeepMind’s safety team to identify potential risks in content generation, privacy implications, and environmental bias.

Limited Research Preview Approach

The phased rollout reflects a commitment to gather real-world usage data before scaling, identify misuse patterns early, and refine safety measures iteratively.

Privacy Protection

User-generated worlds remain private and secure. Google does not use generated content for model training without explicit consent—a crucial distinction from some competitors.

The Future of World Models: What’s Next?

While Genie 3 represents a breakthrough, the trajectory of development suggests several near-term improvements:

Extended Consistency Duration

Research teams are working to extend environmental consistency from several minutes to hours, enabling longer agent training and more complex narratives.

Expanded Action Space

Future versions will likely support more nuanced interactions, including complex object manipulation, character animation, and custom physics constraints.

Improved Text Rendering

Dynamic, readable text generation within worlds will unlock new applications in simulation-based learning and testing.

Multi-Agent Scaling

Better support for large-scale multi-agent scenarios will broaden robotics and crowd-simulation applications.

Integration with Large Language Models

Combining world models with language models could enable agents that both understand environments and reason about them.

Conclusion: Standing at the Frontier

Genie 3 represents a qualitative breakthrough in AI: a general-purpose model that generates fully interactive, physically consistent, explorable worlds from natural language in real time.

The implications ripple across industries:

- Game developers compress months of level design into seconds of iteration

- Roboticists train autonomous systems in infinite safe scenarios

- Educators create immersive learning experiences previously impossible

- Researchers access unlimited training data for AI development

- Scientists visualize complex phenomena interactively

- Filmmakers explore creative possibilities in pre-production

Perhaps most importantly, Genie 3 lends support to a central hypothesis in AGI research: world models, not language models alone, may be the architectural foundation of genuinely general intelligence. By enabling agents to learn through embodied interaction with diverse simulated environments, Genie 3 provides the kind of training infrastructure that researchers believe is essential for the next leap toward AGI.

As access expands from its current limited preview to broader audiences, we can expect entirely new industries built around interactive world generation, acceleration in robotics development, transformation of education, and new forms of creative expression.

Genie 3 is not just another AI tool—it’s a glimpse of the AI-driven future that researchers have long envisioned but only now begun to realize.

FAQs about Genie 3

What is Genie 3?

Genie 3 is a general-purpose AI world model developed by Google DeepMind that generates fully interactive, explorable 3D environments from simple text prompts in real time.

Who developed Genie 3?

Genie 3 was developed by Google DeepMind as part of its long-term research into world models, embodied intelligence, and artificial general intelligence (AGI).

How is Genie 3 different from AI video generators like Sora?

Unlike video generators that produce fixed, non-interactive clips, Genie 3 creates living worlds that respond to user actions, maintain consistency, and allow exploration over several minutes.

What does “world model” mean in AI?

A world model is an AI system that can understand, simulate, and predict how environments evolve over time, including physics, object interactions, and cause-and-effect relationships.

How does Genie 3 generate environments?

It uses an auto-regressive transformer that generates each frame based on prior frames, user input, and learned environmental dynamics, ensuring continuity and realism.

Does Genie 3 use a traditional physics engine?

No, Genie 3 learns physics implicitly from data rather than relying on hard-coded physics rules or simulation engines.

What kind of physics can Genie 3 simulate?

Genie 3 demonstrates realistic gravity, motion, collisions, fluid behavior, lighting, material properties, and terrain deformation.

What are Genie 3’s technical specifications?

It uses an 11-billion-parameter transformer, renders at 720p resolution, runs at 24 frames per second, and maintains consistency for several minutes.

What hardware does Genie 3 run on?

Genie 3 runs on Google’s TPU v5 infrastructure, enabling real-time generation at approximately 41 milliseconds per frame.

How long can Genie 3 maintain world consistency?

Currently, Genie 3 maintains coherent environments for several minutes, a major improvement over earlier versions but still short of hour-long simulations.

What are the main use cases for Genie 3?

Key applications include AI agent training, robotics simulation, game level prototyping, education, scientific visualization, disaster training, and film pre-visualization.

How does Genie 3 help robotics and AI training?

It allows robots and AI agents to train in thousands of diverse, safe, simulated environments before real-world deployment.

Can game developers use Genie 3 to build full games?

Genie 3 is best suited for rapid prototyping and concept testing rather than producing final, production-grade game assets.

Is Genie 3 useful for education?

Yes, it enables immersive, interactive learning experiences such as historical simulations, science demonstrations, and physics-based exploration.

Is Genie 3 publicly available?

Access is currently limited through Google Labs’ Project Genie and is available primarily to Google AI Ultra subscribers in the US.

How much does Genie 3 cost to access?

Genie 3 access is bundled with the Google AI Ultra subscription, priced at $249.99 per month as of early 2026.

Can Genie 3 recreate real-world locations accurately?

It can generate environments inspired by real places but cannot perfectly replicate specific real-world locations with exact accuracy.

What are Genie 3’s current limitations?

Limitations include short consistency duration, limited text rendering, constrained interaction space, and challenges with complex multi-agent simulations.

Why is Genie 3 important for AGI research?

Genie 3 supports embodied learning, allowing AI systems to learn through interaction with environments rather than text alone, a key hypothesis in AGI development.

Will Genie 3 improve over time?

Research teams are working to extend consistency duration, and likely next steps include expanded interactions, better text rendering, support for more agents, and integration with large language models.

Is Genie 3 safe and responsibly developed?

Yes, it was released gradually with safety oversight, privacy protections, and controlled access to reduce misuse and unintended consequences.

What does Genie 3 signal about the future of AI?

It signals a shift from static content generation toward interactive, experiential AI systems that learn by exploring and acting in simulated worlds.
