Sora, developed by OpenAI, is a text-to-video generative AI model that is changing how video content is conceived and created. Its name comes from the Japanese word for “sky,” reflecting the creative range the model aims to offer.

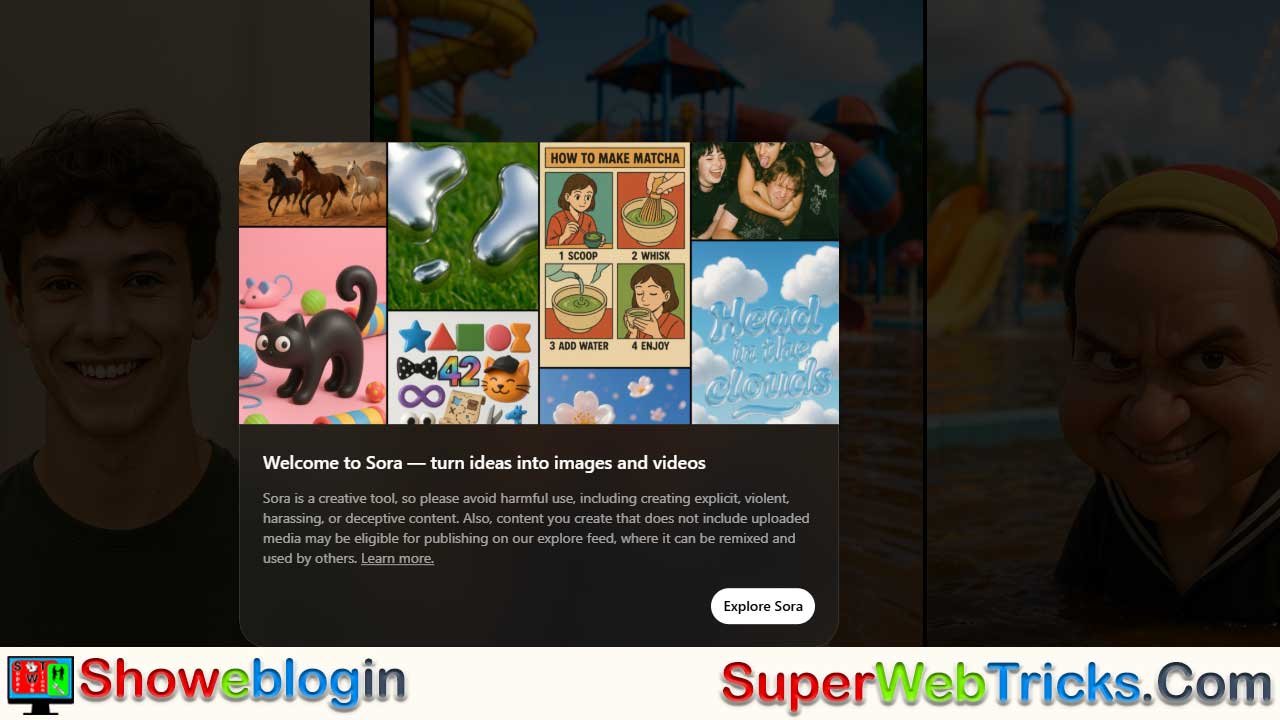

Available through its official platform and within the broader ChatGPT ecosystem, Sora lets users turn text descriptions, images, or short video clips into photorealistic, high-fidelity video with dynamic motion.

| Category | Information |
|---|---|
| What It Is | Advanced text-to-video generative AI model by OpenAI |
| Core Tech | Diffusion Transformer (DiT) operating on space-time patches |
| Input Types | Text prompts, images, short video clips |
| Output | High-fidelity, photorealistic videos up to 60 seconds long (the length shown in OpenAI’s research preview; limits in the released product vary by plan) |
| Resolution | Up to 1080p |
| Strengths | Visual and temporal consistency, complex scenes, convincing physical motion |
| Features | Image-to-video, video-to-video editing, looping, clip extension |
| Audio | Synchronized audio generation in Sora 2 |
| Applications | Film, marketing, gaming, education, social media |
| Unique Advantage | Strong language understanding for precise, prompt-faithful video generation |

The Technical Genius: Understanding Sora’s Architecture

Sora’s capabilities rest on a Diffusion Transformer (DiT): a diffusion model whose denoising network is a transformer rather than the convolutional U-Net used by many earlier image and video generators.

The Diffusion Transformer (DiT)

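At its core, Sora generates video through a diffusion process. Generation starts from random static noise, and the model, which has been trained to predict and remove that noise, refines it over many steps until coherent video emerges. According to OpenAI’s technical report, this denoising happens in a compressed latent representation of the video rather than directly on raw pixels.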
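The denoising loop described above can be sketched in a few lines. This is a minimal, hypothetical illustration, not Sora’s implementation: it uses a generic DDIM-style deterministic sampler with an assumed linear noise schedule, works on a tiny vector instead of video latents, and replaces the trained DiT with a stand-in `oracle` function (hypothetical) that already knows the clean target. With a perfect noise predictor, the loop walks from pure noise back to that target.

```python
import math
import random
from typing import Callable, List

Vector = List[float]


def make_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
                    beta_end: float = 0.02) -> List[float]:
    """Return ᾱ_t = prod_{s<=t} (1 - β_s) for t = 0..num_steps, with ᾱ_0 = 1 (no noise).

    The linear β schedule is an assumption chosen for illustration.
    """
    alpha_bars = [1.0]
    for t in range(1, num_steps + 1):
        beta = beta_start + (beta_end - beta_start) * (t - 1) / max(num_steps - 1, 1)
        alpha_bars.append(alpha_bars[-1] * (1.0 - beta))
    return alpha_bars


def ddim_sample(predict_noise: Callable[[Vector, int], Vector], size: int,
                alpha_bars: List[float], seed: int = 0) -> Vector:
    """Deterministic DDIM-style sampling: start from Gaussian noise, denoise down to t = 0."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # x_T: the "random static"
    for t in range(len(alpha_bars) - 1, 0, -1):
        a_t, a_prev = alpha_bars[t], alpha_bars[t - 1]
        eps = predict_noise(x, t)  # the network's estimate of the noise in x_t
        # Clean sample implied by the current noise estimate.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * ei) / math.sqrt(a_t) for xi, ei in zip(x, eps)]
        # Step to the slightly less noisy level t - 1, reusing the same noise estimate.
        x = [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * ei for x0, ei in zip(x0_hat, eps)]
    return x


# Hypothetical stand-in for a trained denoiser: it "knows" the clean target,
# so its noise prediction is exact. A real model learns this mapping from data.
target = [0.5, -1.0, 0.25, 2.0]
alpha_bars = make_alpha_bars()


def oracle(x: Vector, t: int) -> Vector:
    a = alpha_bars[t]
    return [(xi - math.sqrt(a) * ti) / math.sqrt(1.0 - a) for xi, ti in zip(x, target)]


sample = ddim_sample(oracle, len(target), alpha_bars)
print([round(v, 6) for v in sample])  # [0.5, -1.0, 0.25, 2.0]
```

In a real system the quality of the result depends entirely on how well the learned network predicts the noise; the loop itself stays this simple.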

- Patches as Building Blocks: Sora breaks images and video frames into smaller units called patches, analogous to tokens in a large language model (LLM). This unified representation lets a single model handle diverse resolutions, aspect ratios, and durations.

- Temporal and Visual Consistency: Because video is represented as a sequence of space-time patches spanning many frames, the model can keep characters and objects looking the same (visual fidelity) and moving plausibly (temporal consistency) throughout a clip of up to 60 seconds. This has long been a central challenge in video generation, and Sora makes substantial progress on it, producing largely plausible movement and interactions. OpenAI’s own report notes, however, that it does not yet accurately model some basic physical interactions, such as glass shattering.
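To make the patch idea concrete, here is a minimal pure-Python sketch that cuts a video, stored as nested lists indexed `[frame][row][col][channel]`, into non-overlapping space-time blocks and flattens each block into one token. The function names and the patch size (2 frames by 8 by 8 pixels) are hypothetical: OpenAI has not published Sora’s patch dimensions, and its report describes patching a compressed latent video rather than raw pixels.

```python
from typing import List

# A video as nested lists indexed [frame][row][col][channel].
Video = List[List[List[List[float]]]]
Token = List[float]


def spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> List[Token]:
    """Split a video into non-overlapping pt x ph x pw blocks and flatten each into one token."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    tokens: List[Token] = []
    for t0 in range(0, T, pt):          # step through time
        for y0 in range(0, H, ph):      # then rows
            for x0 in range(0, W, pw):  # then columns
                tokens.append([
                    value
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                    for value in video[t][y][x]
                ])
    return tokens


def blank_video(frames: int, height: int, width: int, channels: int = 3) -> Video:
    """Hypothetical helper: an all-zero video of the given shape."""
    return [[[[0.0] * channels for _ in range(width)] for _ in range(height)]
            for _ in range(frames)]


wide = spacetime_patches(blank_video(8, 16, 32), pt=2, ph=8, pw=8)
tall = spacetime_patches(blank_video(8, 32, 16), pt=2, ph=8, pw=8)
short = spacetime_patches(blank_video(4, 16, 32), pt=2, ph=8, pw=8)
print(len(wide), len(tall), len(short))  # 32 32 16
print(len(wide[0]))                      # 384 = 2 * 8 * 8 * 3 values per token
```

Because a clip of any shape simply becomes a longer or shorter token sequence, the same transformer can consume wide, tall, short, or long videos; only the sequence length changes.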

The Role of Language Understanding

Sora is a conditional generative model: every video it produces is steered by the user’s prompt. OpenAI trained it on videos paired with highly descriptive captions and uses GPT to expand short user prompts into more detailed descriptions, which helps the model follow nuanced instructions about subject, mood, and style. This is the difference between generating a generic “dog walking” and generating a “fluffy golden retriever wearing a tiny raincoat happily splashing through a puddle in a Tokyo alleyway with neon signs reflecting in the water.”
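A practical takeaway for prompt writing is to spell out the subject, action, setting, and visual details rather than relying on a short phrase. The sketch below is a hypothetical helper (the `ShotDescription` class is illustrative only and is not part of any OpenAI API) that assembles those pieces into a single prompt string.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ShotDescription:
    """Hypothetical structure for writing detailed video prompts."""
    subject: str                 # who or what is on screen
    action: str                  # what the subject is doing
    setting: str = ""            # where the scene takes place
    details: List[str] = field(default_factory=list)  # lighting, mood, style, camera

    def to_prompt(self) -> str:
        # Join the non-empty core parts, then append any extra details after commas.
        core = " ".join(part for part in (self.subject, self.action, self.setting) if part)
        if not core:
            raise ValueError("a prompt needs at least a subject or an action")
        prompt = ", ".join([core, *self.details])
        return prompt[0].upper() + prompt[1:] + "."


generic = ShotDescription(subject="a dog", action="walking")
detailed = ShotDescription(
    subject="a fluffy golden retriever wearing a tiny raincoat",
    action="happily splashing through a puddle",
    setting="in a Tokyo alleyway",
    details=["neon signs reflecting in the water"],
)
print(generic.to_prompt())  # A dog walking.
print(detailed.to_prompt())
```

Filling every field forces the kind of specificity the paragraph above describes; the structure is a writing aid, not a format Sora requires.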

Enhanced Capabilities and Creative Toolkit

Sora offers a powerful suite of features that move it beyond a mere novelty and into the realm of professional production:

- Extended Generation Length: Sora can create videos up to 60 seconds long, a significant duration for generative models, providing ample time for rich scene development and storytelling.

- High Fidelity and Resolution: Generated videos can reach 1080p resolution, with intricate detail, lighting, and texture.

- Multi-Character and Dynamic Scene Support: The model excels at rendering complex scenes involving multiple characters, specific interactions between them, and sophisticated camera movements, such as sweeping crane shots or smooth tracking shots.

- Video-to-Video Editing: Users can upload an existing video and use a text prompt to edit, restyle, or transform it. For example, a prompt can change the weather, turn a live-action scene into an animated one, or add a new character.

- Image-to-Video Generation: By inputting a single static image, Sora can bring it to life, generating a video that incorporates realistic movement and depth while retaining the visual style of the original picture.

- Seamless Loop and Extension: Sora can generate videos that loop perfectly or extend an existing clip in a forward or backward direction while maintaining temporal consistency.

- Integrated Audio (Sora 2): Sora 2 can generate synchronized dialogue, sound effects, and ambient sound alongside the video, removing the need for separate audio post-production on basic clips.

Applications and Future Impact

Sora’s impact stretches across numerous industries, democratizing high-quality video production and reducing the barriers to entry for complex visual storytelling:

| Industry | Primary Application | Impact |
|---|---|---|
| Film & Media | Rapid prototyping, pre-visualization, and storyboarding. | Cuts down on expensive and time-consuming pre-production. |
| Marketing & Advertising | Creating highly tailored, scalable ad campaigns quickly. | Enables personalized video ads for niche audience segments. |
| Game Development | Generating realistic textures, environmental effects, and cinematic cutscenes. | Accelerates asset creation and improves visual realism. |
| Education | Producing educational animations and visual demonstrations of complex concepts. | Makes abstract subjects more accessible and engaging. |
| Social Media | Rapid creation of viral, high-quality, short-form content. | Empowers creators with limited resources to produce professional clips. |

Sora represents a pivotal moment: a generative model that not only understands language but also simulates, with increasing accuracy, the complex and dynamic physical world that language describes. It positions OpenAI at the forefront of the next era of digital media creation.

FAQs

What is Sora?

Sora is OpenAI’s text-to-video generative AI model that creates high-fidelity videos from text, images, or clips.

How long can Sora’s generated videos be?

OpenAI’s research preview showed videos up to 60 seconds long with strong visual and temporal consistency; the maximum length in the released product depends on the plan.

What resolution does Sora support?

Sora can output videos at up to 1080p resolution.

What technology powers Sora?

It uses a Diffusion Transformer (DiT) architecture that generates videos by denoising space-time patches.

Can Sora interpret complex prompts?

Yes, it uses deep language understanding to capture detailed descriptions, emotions, and creative intent.

Does Sora support multi-character scenes?

Yes, it can handle complex interactions between characters and dynamic environments.

Can Sora turn images into videos?

Yes, Sora can animate static images while preserving their visual style.

Can Sora edit existing videos?

Yes, users can apply text-based transformations to uploaded video clips.

Does Sora generate audio?

Sora 2 includes support for synchronized dialogue, effects, and ambient sound.

Can Sora create seamless loops?

Yes, Sora can generate seamlessly looping videos or extend existing footage smoothly.

Is Sora suitable for professional workflows?

Yes, its visual fidelity and consistency make it useful for film, ads, and game development.

Can Sora simulate realistic physics?

Largely, yes. It produces convincing motion, lighting, and object interactions across a scene, though OpenAI acknowledges it can still get some physical interactions wrong.

What industries benefit from Sora?

Film, marketing, gaming, education, and social media creation.

Does Sora support different aspect ratios?

Yes, its patch-based system allows flexible resolutions and aspect ratios.

Can Sora create cinematic camera movements?

Yes, it can simulate crane shots, tracking shots, pans, and other complex movements.

Does Sora require advanced skills to use?

No, it is designed for both beginners and professionals through simple prompt-based control.

Can Sora help with storyboarding or prototyping?

Yes, it rapidly visualizes scenes, making it useful for pre-production.

Is Sora capable of generating stylized content?

Yes, it can create videos in various artistic styles based on instructions.

Can Sora extend videos backward or forward?

Yes, it can generate temporally consistent extensions in both directions.

Does Sora support social media use cases?

Yes, it enables quick creation of highly engaging short-form content.
